Chapter 13 Building good visualizations

Visualization should nearly always be the first step in analyzing simulation results. In the prior chapter, we saw a variety of examples primarily taken from published work. Those visualizations were not the initial ones created for those research projects. In practice, getting to a good visualization often requires creating many different graphs to look at different aspects of the data. From that pile of graphs, you would then curate and refine those that communicate the overall results most cleanly.

In our work, we find we often generate a series of R Markdown reports with comprehensive sets of charts targeting our various research questions. These initial documents are then discussed internally by the research team.

In this chapter we first discuss four essential tools that we frequently use to make these initial sets of graphs:

- Subsetting: Multifactor simulations can be complex and confusing. Sometimes it is easier to first explore a subset of the simulation results, such as a single factor level.

- Many small multiples: Plot many results in a single plot, with facets to break up the results by simulation factors.

- Bundling: Group the results by a primary factor of interest, and then plot the performance measure as a boxplot so you can see how much variation there is within that factor level.

- Aggregation: Average the performance measure across some of the simulation factors, so you can see overall trends with respect to the remaining factors.

To illustrate these tools, we walk through them using our running example of comparing methods for analyzing a Cluster RCT. We will start with an investigation of bias.

As a reminder, in our Cluster RCT example, we have three methods for estimating the average treatment effect: linear regression of the student-level outcome onto treatment (with cluster-robust standard errors); aggregation, where we regress the cluster-level average outcome onto treatment (with heteroskedastic robust standard errors); and multilevel modeling with random effects.

We want to know if these methods are biased for our defined estimand, which is the cluster-average treatment effect.

We have five simulation factors: school size (n_bar), number of schools (J), the intraclass correlation (ICC), the degree of variation in school size (alpha), and the relationship between school size and treatment effect (size_coef).

Once we go through the four core tools, we continue our evaluation of our simulation to show how we can assess other performance metrics of interest (true standard error, RMSE, estimated standard errors, and coverage) using these tools. We do not dive deeply into validity or power; see Chapter 20 for more on those measures.

13.1 Subsetting and Many Small Multiples

“Small Multiples” is when you make a plot that is actually a bunch of related plots. These plots can themselves be organized in a plot-like structure, with an x-axis depending on one categorical variable, and the y-axis another (this is a “facet grid”).

Generally, with small multiples, it is relatively straightforward to look at three simulation factors. This is because, with a facet grid, you can easily plot five aspects of your data: two for the facet x- and y-axis arrangement, one for the within-facet x-axis, and one for the within-facet y-axis, and one for color/line type. Of the five aspects, we usually use one of these for method (if we are comparing methods) and one for the performance metric (the outcome), giving three remaining aspects to assign to our simulation factors. In cases with more than three factors, an easy initial approach is pick three favorite factors and then filter all the simulation results down to specific levels for the remaining factors.

For example, for our simulation, we might target the ICC of 0.20, taking it as a “reasonable” value that, given our substance matter knowledge, we know is frequently found in empirical data.

We might further pick \(\bar{n} = 80\), as our middle level for cluster size.

This leaves three factors (J, size_coef, and alpha), allowing us to plot all of our simulation results for our subset.

We then decide to plot bias (our performance measure) as a function of J, with different lines for the different methods, and facets for the different levels of size_coef and alpha.

sres_sub <- sres %>%

filter( ICC == 0.20, n_bar == 80 )

ggplot( sres_sub, aes( as.factor(J), bias,

col=method, pch=method, group=method ) ) +

facet_grid( size_coef ~ alpha, labeller = label_both ) +

geom_point() + geom_line() +

geom_hline( yintercept = 0 ) +

theme_minimal()

Each point is one of our methods in one of our simulation scenarios. We are looking at the raw results. We connect the points with lines to help us see trends within each of the small multiples. The lines help us visually track which group of points goes with which.

We immediately see that when there is a lot of site variation (alpha = 0.80), and it relates to outcome (size_coef=0.2), linear regression is very different from the other two methods.

We also see that when alpha is 0 and size_coef is 0, we may also have a negative bias when $J = 5`.

Before we get too excited about this surprising result, we add MCSEs to our plot to see if this is a real effect or just noise:

ggplot( sres_sub, aes( as.factor(J), bias,

col=method, pch=method, group=method ) ) +

facet_grid( size_coef ~ alpha, labeller = label_both ) +

geom_point( position = position_dodge( width=0.3) ) +

geom_line(position = position_dodge( width=0.3)) +

geom_errorbar( aes( ymin = bias - 1.96*bias_mcse,

ymax = bias + 1.96*bias_mcse ),

position = position_dodge( width=0.3),

width = 0 ) +

geom_hline( yintercept = 0 ) +

theme_minimal()

Our confidence intervals exclude zero!

Our excitement mounts.

We next subset our overall results again to check all the scenarios with alpha = 0, and size_coef = 0 to see if this is a real effect.

We can still plot all our data, as for that subset of scenarios we still only have three factors, ICC, J_bar, and n_bar, left to plot.

null_sub <- sres %>%

dplyr::filter( alpha == 0, size_coef == 0 )

ggplot( null_sub, aes( ICC, bias,

col=method, pch=method, group=method ) ) +

facet_grid( J ~ n_bar, labeller = label_both ) +

geom_point( position = position_dodge( width=0.03) ) +

geom_line( position = position_dodge( width=0.03) ) +

geom_errorbar( aes( ymin = bias - 1.96*bias_mcse,

ymax = bias + 1.96*bias_mcse ),

position = position_dodge( width=0.03),

width = 0 ) +

geom_hline( yintercept = 0 ) +

theme_minimal()

It appears that our ICC=0.20 and n_bar=80 scenario was just a fluke.

Almost all of our confidence intervals easily cover 0, and we are not seeing any major trends.

Overall we conclude that indeed, when there is no variation, or when the variation does not relate to impact, nothing is particularly biased.

Interestingly, we further see that all methods seem to give identical estimates when there is no site variation, regardless of ICC, J, or n_bar (identical estimates is the easiest explanation of all the estimated biases and associated MCSEs across the three methods being identical).

Subsetting is a very useful tool, especially when the scope of the simulation feels overwhelming. And as we just saw, it can also be used as a quick validity check: subset to a known context where we know nothing exciting should be happening to verify that indeed nothing is there.

Subsetting allows for a deep dive into specific context. It also can make it easier to think through what is happening in a complex context; think of it as a flashlight, shining attention on one part of your overall simulation or another, to focus attention and reduce complexity. Sometimes we might even just report the results for a subset in our final analysis and put the analysis of the the remaining scenarios elsewhere, such as an online supplemental appendix. In this case, it would then be our job to verify that our reported findings on the main results indeed were echoed in the set-aside runs.

Subsetting is useful, but if you do want to look at all your simulation results at once, you need to somehow aggregate or group your results to make them all fit on the plot. We next present bundling, a way of keeping the core idea of small multiples to show all of the raw results, but now in a semi-aggregated way.

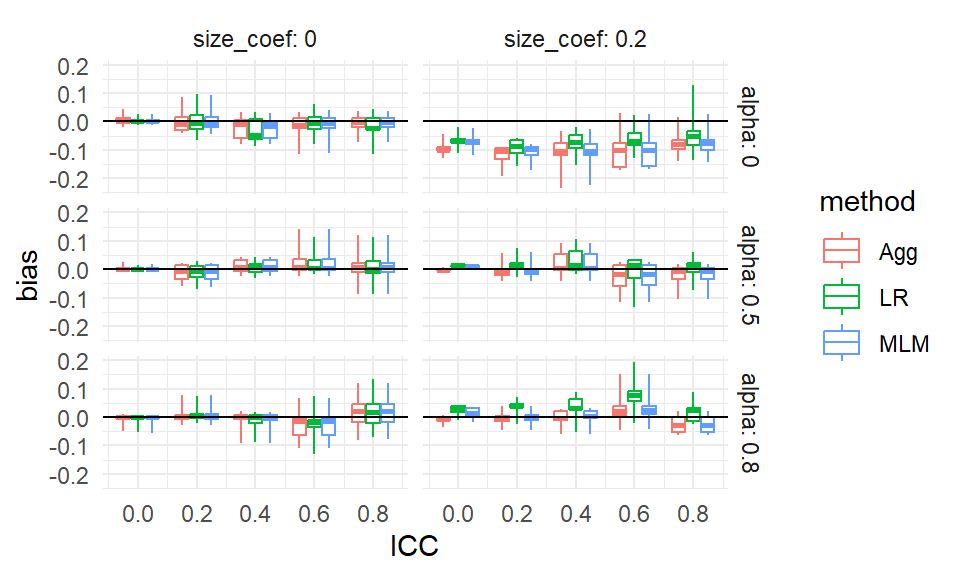

13.2 Bundling

When faced with many simulation factors, we can bundle the simulations into groups defined by a selected primary factor of interest, and then plot each bundle with a boxplot of the distribution of a selected performance criteria. Each boxplot shows the central measure of how well an estimator worked across a set of scenarios, along with a sense of how much that performance varied across those scenarios in the box. If the boxes are narrow, then we know that the variation across simulations within the box did not impact performance much. If the boxes are wide, then we know that the factors that vary within the box matter a lot for performance.

With bundling, we generally need a good number of simulation runs per scenario, so that the MCSE in the performance measures does not make our boxplots look substantially more variable (wider) than the truth. Consider a case where all the scenarios within a box have zero true bias; if the MCSE were large, the estimated biases would still vary and we would see a wide boxplot when we should not.

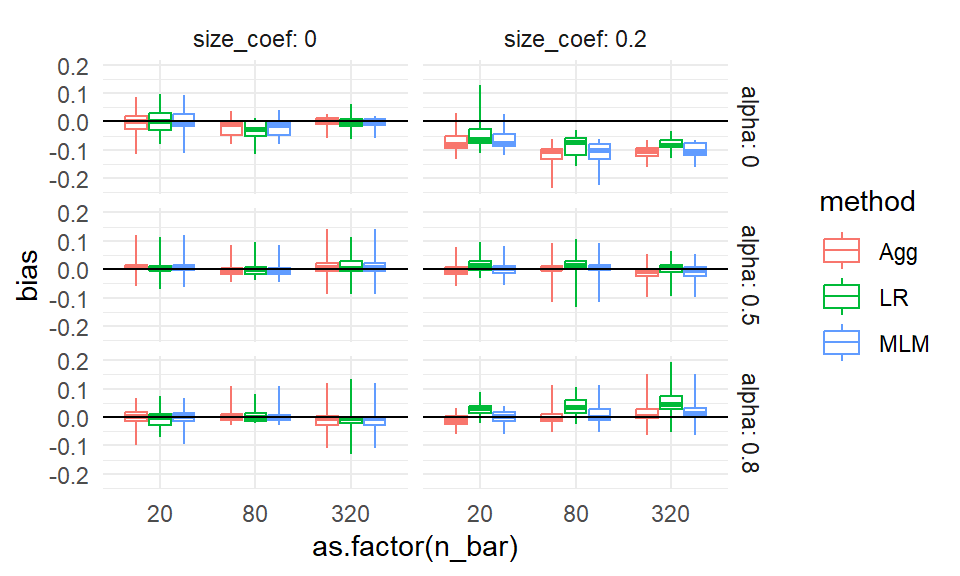

To illustrate bundling, we replicate our small subset figure from above, but instead of each point (with a given J',alpha, andsize_coef) just being the single scenario withn_bar=80andICC = 0.20`, we plot all the scenarios in a boxplot at that location.

We put the boxes for the three methods side-by-side to directly compare them:

ggplot( sres, aes( as.factor(J), bias, col=method,

group=paste0(method, J) ) ) +

facet_grid( size_coef ~ alpha, labeller = label_both ) +

geom_boxplot( coef = Inf, width=0.7, fill="grey" ) +

geom_hline( yintercept = 0 ) +

theme_minimal()

All of our simulation trials are represented in this plot.

Each box is a collection of simulation trials. E.g., for J = 5, size_coef = 0, and alpha = 0.8 each of the three boxes contains 15 scenarios representing the varying ICC and cluster size.

Here are the 15 results in the top right box for the Aggregation method:

filter( sres,

J == 5,

size_coef == 0,

alpha == 0.8,

method=="Agg" ) %>%

dplyr::select( n_bar, J, size_coef, ICC, alpha, bias, bias_mcse ) %>%

arrange( bias ) %>%

knitr::kable( digits = 2 )| n_bar | J | size_coef | ICC | alpha | bias | bias_mcse |

|---|---|---|---|---|---|---|

| 80 | 5 | 0 | 0.6 | 0.8 | -0.03 | 0.02 |

| 320 | 5 | 0 | 0.8 | 0.8 | -0.02 | 0.02 |

| 20 | 5 | 0 | 0.6 | 0.8 | -0.02 | 0.02 |

| 80 | 5 | 0 | 0.8 | 0.8 | -0.02 | 0.03 |

| 20 | 5 | 0 | 0.4 | 0.8 | -0.01 | 0.02 |

| 20 | 5 | 0 | 0.2 | 0.8 | -0.01 | 0.01 |

| 320 | 5 | 0 | 0.2 | 0.8 | 0.00 | 0.01 |

| 320 | 5 | 0 | 0.4 | 0.8 | 0.00 | 0.02 |

| 20 | 5 | 0 | 0.0 | 0.8 | 0.00 | 0.01 |

| 80 | 5 | 0 | 0.4 | 0.8 | 0.00 | 0.02 |

| 320 | 5 | 0 | 0.0 | 0.8 | 0.00 | 0.00 |

| 80 | 5 | 0 | 0.0 | 0.8 | 0.00 | 0.00 |

| 320 | 5 | 0 | 0.6 | 0.8 | 0.01 | 0.02 |

| 80 | 5 | 0 | 0.2 | 0.8 | 0.01 | 0.01 |

| 20 | 5 | 0 | 0.8 | 0.8 | 0.07 | 0.03 |

Our bias boxplot makes some trends clear.

For example, we see that there is no bias, on average, for any method when the size coefficient is 0 and alpha is 0, especially when \(J = 80\).

When the size coefficient is 0.2, we also see LR jump out from the others when alpha is not 0.

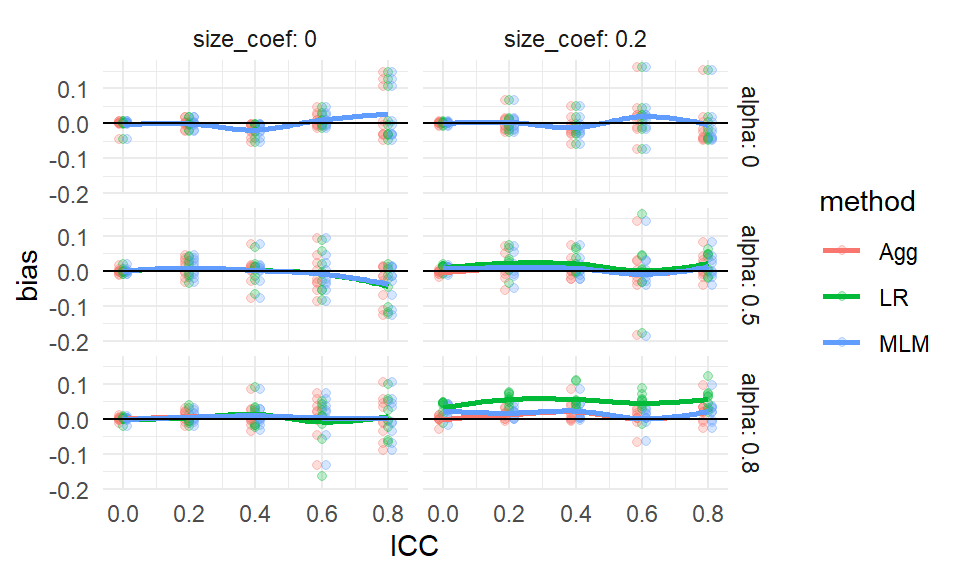

The apparent outliers (long tails) for some of the boxplots suggest that the two remaining factors (ICC and cluster size) could relate to the degree of bias. They could also be due to MCSE, and given that we primariy see these tails when \(J\) is small, this is a real concern. MCSE aside, a long tail means that some scenario in the box had a high level of estimated bias. We could try bundling along different aspects to see if either of the remaining factors (e.g., ICC) explains these differences. Here we try bundling cluster size and number of clusters.

ggplot( sres, aes( as.factor(alpha), bias, col=method,

group=paste0(method, alpha) ) ) +

facet_grid( size_coef ~ ICC, labeller = label_both ) +

geom_boxplot( coef = Inf, width=0.7, fill="grey" ) +

geom_hline( yintercept = 0 ) +

theme_minimal()

We have some progress now: the long tails are primarily when the ICC is high, but we also see that MLM has bias with ICC is 0, if alpha is nonzero.

We know things are more unstable in smaller samples sizes, so the tails could still be MCSE, with some of our bias estimates being large due to random chance. Or perhaps there is still some specific combination of factors that allow for large bias (e.g., perhaps small sample sizes makes our estimators more vulnerable to bias). In an actual analysis, we would make a note to investigate these anomalies later on.

In general, trying to group your simulation scenarios so that their boxes are generally narrow is a good idea; narrow boxes means that you have found a representation of the data where you know what is driving the variation in your performance measure, and that the factors bundled inside the boxes are less important. This might not always be possible, if all your factors matter; in this case the width of your boxes tells you to what extent the bundled factors matter relative to the factors explicitly present in your plot.

One might wonder, with only few trials per box, whether we should instead look at the individual scenarios. Unfortunately, that gets a bit cluttered:

ggplot( sres, aes( as.factor(alpha), bias, col= method,

group=paste0(alpha,ICC,method) ) ) +

facet_grid( size_coef ~ ICC, labeller = label_both ) +

geom_point( size = 0.5,

position = position_dodge(width=0.7 ) ) +

geom_hline( yintercept = 0 ) +

theme_minimal()

Using boxplots, even over such a few number of points, notably clarifies a visualization.

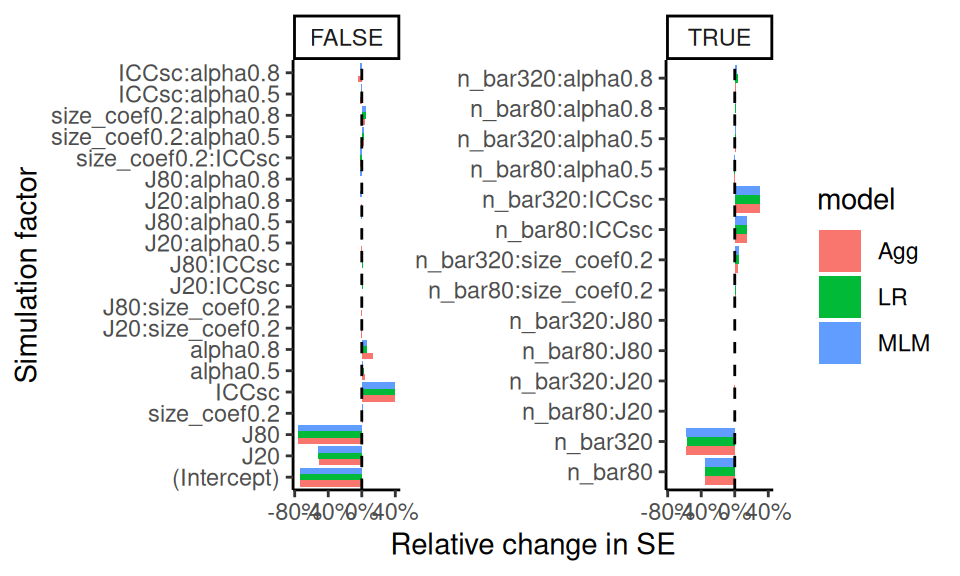

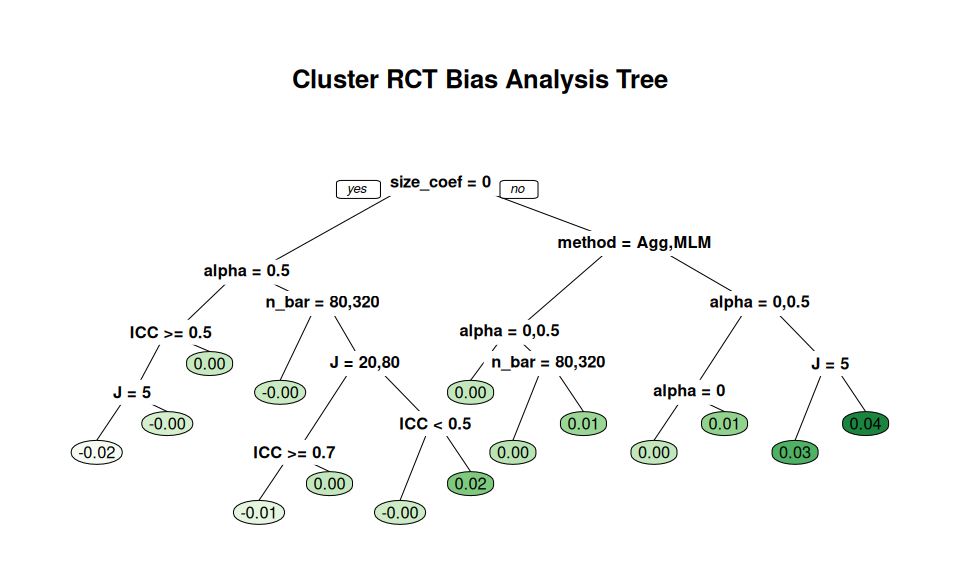

13.3 Aggregation

Boxplots can make seeing trends more difficult, as the eye is drawn to the boxes and tails, and the range of your plot axes can be large due to needing to accommodate the full tails and outliers of your results; this can compress the mean differences between groups, making them look small. They can also be artificially inflated, especially if the MCSEs are large. Instead of bundling, we can therefore aggregate, where we average all the scenarios within a box to get a single number of average performance. This will show us overall trends rather than individual simulation variation.

When we aggregate, and average over some of the factors, we collapse our simulation results down to fewer moving parts. Aggregation across factors is better than not having varied those factors in the first place! A performance measure averaged over a factor is a more general answer of how things work in practice than having not varied the factor at all.

For example, if we average across ICC and site variation, and see that our methods had different degrees of bias as a function of \(J\), we would know that the found trend is a general trend across a range of scenarios defined by different ICC and site variation levels, rather than a specific one tied to a single ICC and amount of site variation. Our conclusions would then be more general: if we had not explored more scenarios, we would not have any sense of how general our found trend might be.

That said, if some of our scenarios had no bias, and some had large bias, when we aggregated we would report that there is generally a moderate amount of bias. This would not be entirely faithful to the actual results. But when the initial boxplots show results generally in one direction or another, then aggregation can be quite faithful to the spirit of the results.

A major advantage of aggregation over the bundling approach is we can have fewer replications per scenario. If the number of replicates within each scenario is small, then the performance measures for each scenario is estimated with a lot of error; the aggregate, by contrast, will be an average across many more replicates and thus give a good sense of average performance. The averaging, in effect, gives a lot more replications per aggregated performance measure.

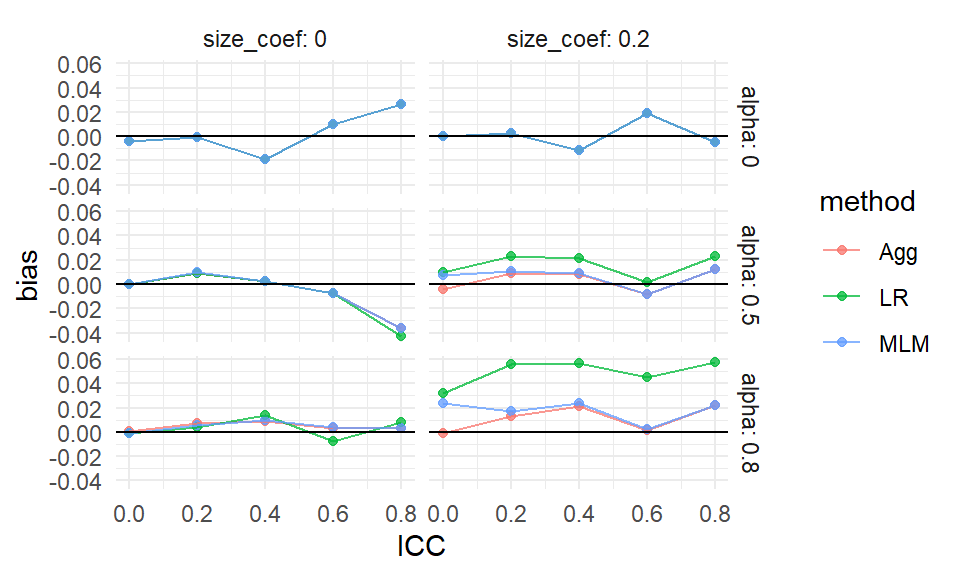

For our cluster RCT, we might aggregate our bias across our sample sizes as follows:

We now have a single bias estimate for each combination of size_coef, J, and alpha; we have collapsed 15 scenarios into one overall scenario that generalizes bias across different average cluster sizes and different ICCs. We can then plot, using many small multiples:

ggplot( ssres, aes( as.factor(J), bias, col=method, group=method ) ) +

facet_grid( size_coef ~ alpha, labeller = label_both ) +

geom_point( alpha=0.75 ) +

geom_line( alpha=0.75 ) +

geom_hline( yintercept = 0 ) +

theme_minimal()

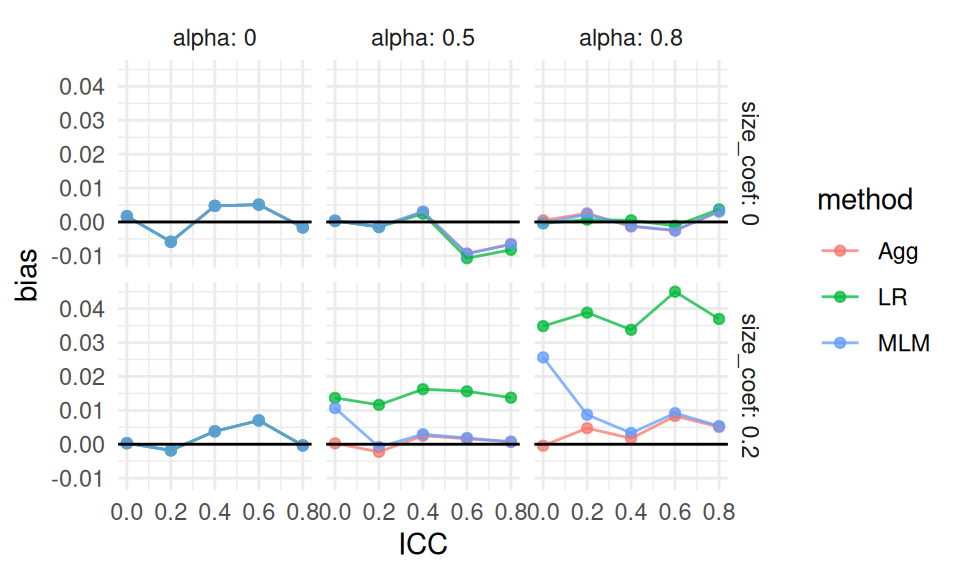

We now see quite clearly that as alpha grows, linear regression gets more biased if cluster size relates to average impact in the cluster (size_coef).

Our finding makes sense given our theoretical understanding of the problem—if size is not related to treatment effect, it is hard to imagine how varying cluster sizes would cause much bias.

We are looking at an interaction between our simulation factors: we only see bias for linear regression when cluster size relates to impact and there is variation in cluster size. We also see that all the estimators have near zero bias when there is no variation in cluster size or the cluster size does not relate to outcome, as shown by the top row and left column facets. Finally, we see the methods all likely give the same answers when there is no cluster size variation, given the overplotted lines on the left column of the figure.

We might take this figure as still too complex.

So far we have learned that MLM does seem to react to ICC, and that LR reacts to alpha and size_coef in combination.

More broadly, with many levels of a factor, as we have with ICC, we can let ggplot aggregate directly by taking advantage of geom_smooth().

This leads to the following:

Our story is fairly clear now: LR is biased when alpha is large and the cluster size relates to impact. MLM can be biased when ICC is low, if cluster size relates to impact (this is because it is driving towards person-weighting when there is little cluster variation).

Aggregation is powerful, but it can be misleading if you have scaling issues or extreme outliers. With bias, our scale is fairly well set, so we are good. But if we were aggregating standard errors over different sample sizes, then the larger standard errors of the smaller sample size simulations (and the greater variability in estimating those standard errors) would swamp the standard errors of the larger sample sizes. Usually, with aggregation, we want to average over something we believe does not change massively over the marginalized-out factors. To achieve this, we can often average over a relative measure (such as standard error divided by the standard error of some baseline method), which tend to be more invariant and comparable across scenarios. We will see more examples of this kind of aggregation later on.

13.3.0.1 Some notes on how to aggregate

Some performance measures are biased with respect to the Monte Carlo uncertainty. The estimated standard error, for example, is biased; the variance, by contrast, is not. The RMSE is biased, the MSE is not.

When aggregating, therefore, it is often best to aggregate the unbiased performance measures, and then calculate the biased ones from those. For example, to estimate aggregated standard error you might do the following:

agg_perf <- sres %>%

group_by( ICC, method, alpha, size_coef ) %>%

summarise( SE = sqrt( mean( SE^2 ) ) )Because bias is linear, you do not need to worry about the MCSE. But if you are looking at the magnitude of bias (\(|bias|\)), then you can run into issues when the biases are close to zero, if they are measured noisily. For example, imagine you have two scenarios with true bias of 0.0, but your MCSE is 0.02. In one scenario, you estimate a bias of 0.017, and in the other -0.023. If you average the estimated biases, you get -0.003, which suggests a small bias as we would wish. Averaging the absolute biases, on the other hand, gives you 0.02, which could be deceptive. With high MCSE and small magnitudes of bias, looking at average bias, not average \(|bias|\), is safer.

Alternatively, you can use the formula \(RMSE^2 = Bias^2 + SE^2\) to back out the average absolute bias from the RMSE and SE.

13.4 Comparing true SEs with standardization

We just did a deep dive into bias. Uncertainty (standard errors) is another primary performance criterion of interest.

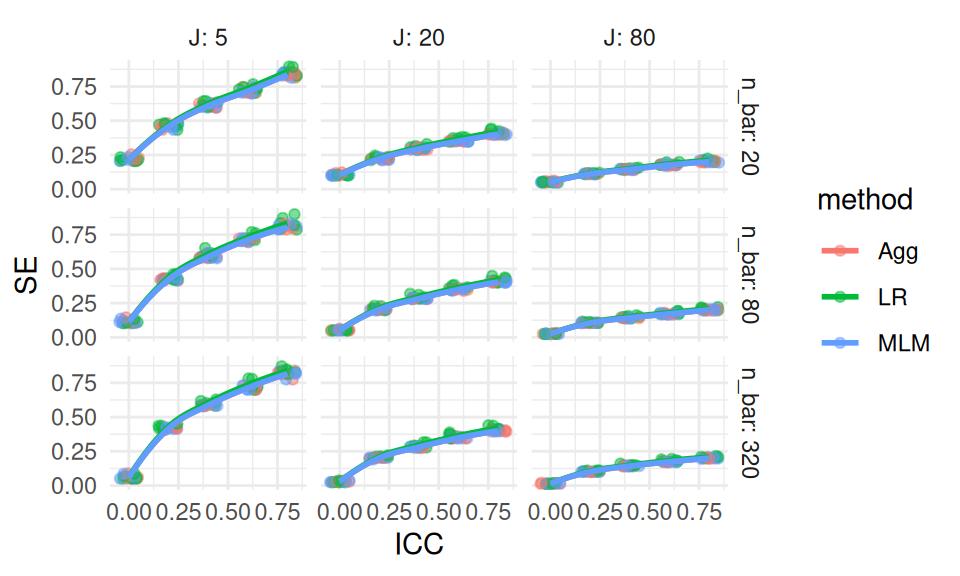

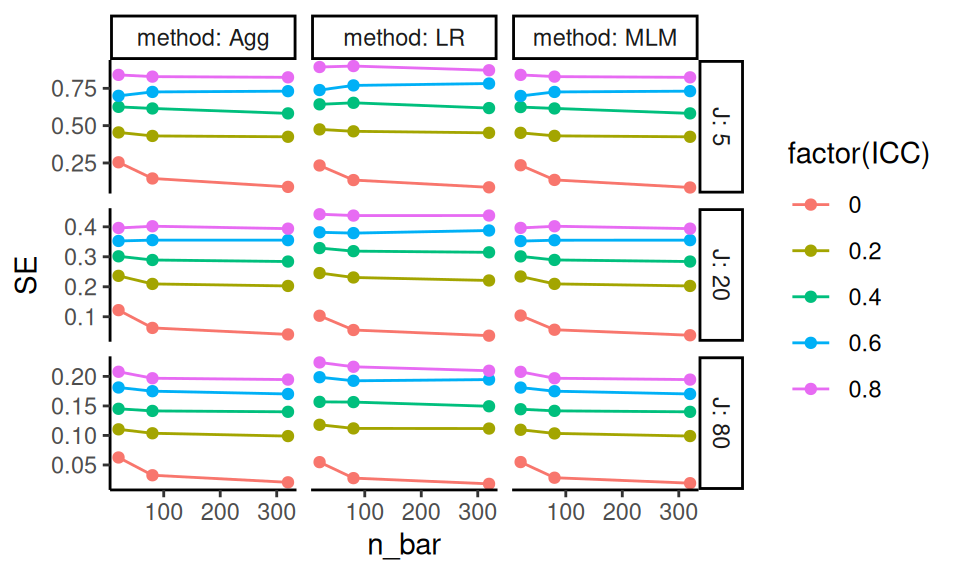

As an initial exploration, we plot the standard error estimates from our Cluster RCT simulation, using smoothed lines to visualize trends. We use ggplot’s geom_smooth to aggregate over size_coef and alpha, which we leave out of the plot.

We include individual data points to visualize variation around the smoothed estimates:

ggplot( sres, aes( ICC, SE, col=method ) ) +

facet_grid( n_bar ~ J, labeller = label_both ) +

geom_jitter( height = 0, width = 0.05, alpha=0.5 ) +

geom_smooth( se=FALSE, alpha=0.5 ) +

theme_minimal()

We observe several broad trends in the standard error behavior. First, standard error increases with the intraclass correlation (ICC). This is as expected: greater similarity within clusters reduces the effective sample size. Second, standard error decreases as the number of clusters (J) increases, which reflects the benefit of having more independent units of analysis. In contrast, increasing the cluster size (n_bar) has relatively little effect on the standard error (the rows of our facets look about the same). Lastly, all methods show fairly similar levels of standard error overall.

While we can extract all of these from the figure, the figure is still not ideal for comparing our methods. The dominant influence of design features like ICC and sample size obscures our ability to detect meaningful differences between methods. In other words, even though SE changes across scenarios, it’s difficult to tell which method is actually performing better within each scenario.

We can also view the same information by bundling over the left-out dimensions.

We put n_bar in our bundles because maybe it does not matter that much:

ggplot( sres, aes( ICC, SE, col=method, group=paste0( ICC, method ) ) ) +

facet_grid( . ~ J, labeller = label_both ) +

geom_boxplot( width = 0.2, coef = Inf) +

scale_x_continuous( breaks = unique( sres$ICC)) +

theme_minimal()

Our plots are still dominated by the strong effects of ICC and the number of clusters (J). When performance metrics like standard error vary systematically with design features, it becomes difficult to compare methods meaningfully across scenarios.

To address this, we shift our focus through standardization. Instead of noting that:

“All my SEs are getting smaller,”

we want to conclude that:

“Estimator 1 has systematically higher SEs than Estimator 2 across scenarios.”

Simulation results are often driven by broad design effects, which can obscure the specific methodological questions we care about. Standardizing helps bring those comparisons to the forefront. Let’s try that next.

One straightforward strategy for standardization is to compare each method’s performance to a designated baseline. In this example, we use Linear Regression (LR) as our baseline.

We standardize by, for each simulation scenario, dividing each method’s SE by the SE of LR, to produce SE.scale.

This relative measure, SE.scale, allows us to examine how much better or worse, across our scenarios, each method performs relative to a chosen reference method.

ssres <-

sres %>%

group_by( n_bar, J, ATE, size_coef, ICC, alpha ) %>%

mutate( SE.scale = SE / SE[method=="LR"]) %>%

ungroup()We can then treat SE.scale as a measure like any other.

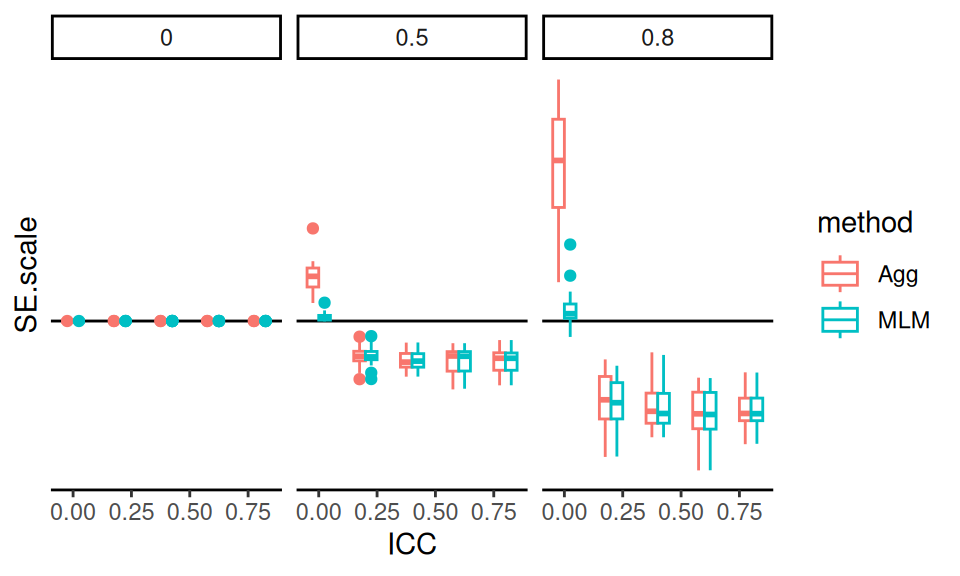

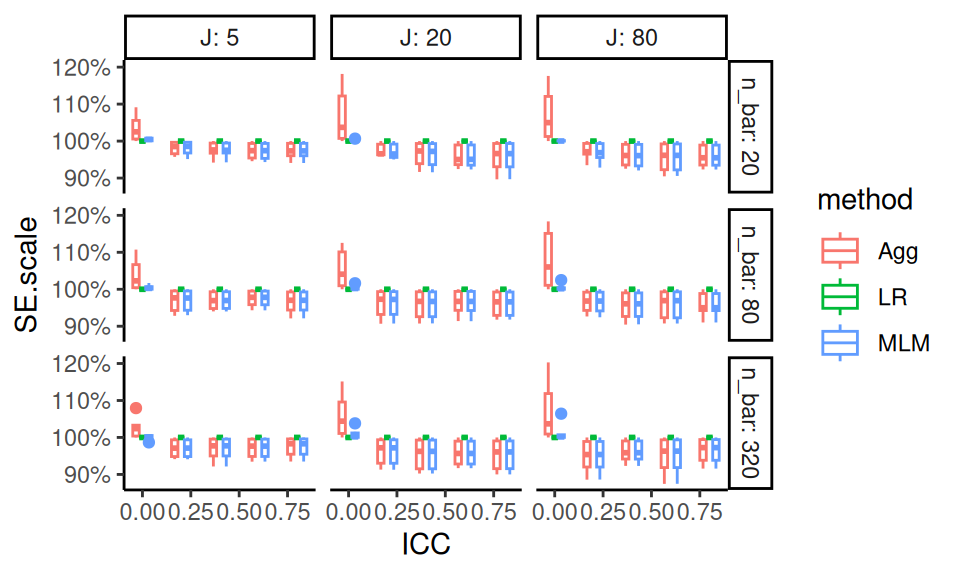

Here we bundle, showing how relative SE changes by J, n_bar and ICC:

ggplot( ssres, aes( ICC, SE.scale, col=method,

group = interaction(ICC, method) ) ) +

facet_grid( n_bar ~ J, labeller = label_both ) +

geom_boxplot( position="dodge", width=0.1 ) +

scale_y_continuous( labels = scales::percent_format() )

The figure above shows how each method compares to LR across simulation scenarios. Aggregation clearly performs worse than LR when the Intraclass Correlation Coefficient (ICC) is zero. However, when ICC is greater than zero, Aggregation yields improved precision. The Multilevel Model (MLM), in contrast, appears more adaptive. It captures the benefits of aggregation when ICC is high, but avoids the precision cost when ICC is zero. This adaptivity makes MLM appealing in practice when ICC is unknown or variable across contexts.

In looking at the plot we are seeing essentially identical rows and and fairly similar across columns.

This suggests we should bundle the n_bar to get a cleaner view of the main patterns, and that we can also bundle over J as well.

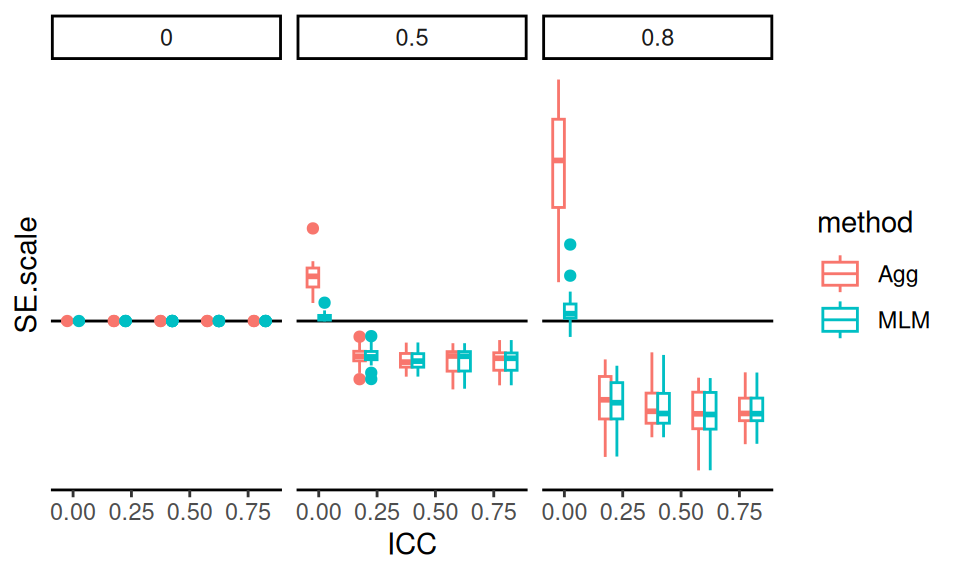

We finally drop the LR results entirely, as it is the reference method and always has a relative SE of 1.

ssres %>%

filter( method != "LR" ) %>%

ggplot( aes( ICC, SE.scale, col=method,

group = interaction(ICC, method) ) ) +

facet_grid( size_coef ~ alpha, labeller = label_both ) +

geom_hline( yintercept = 1 ) +

geom_boxplot( position="dodge", width=0.1 ) +

scale_x_continuous( breaks=unique( ssres$ICC ) ) +

scale_y_continuous( breaks=seq(90, 125, by=5 ) )

The pattern is clear: when ICC = 0, Aggregation performs worse than LR, and MLM performs about the same. But as ICC increases, Aggregation and MLM both improve, and perform about the same to each other. This highlights the robustness of MLM across diverse conditions.

As a warning regarding Monte Carlo uncertainty: when standardizing results, it is important to remember that uncertainty in the baseline measure (here, LR) propagates to the standardized values. This should be considered when interpreting variability in the scaled results. Uncertainty for relative performance is generally tricky to assess.

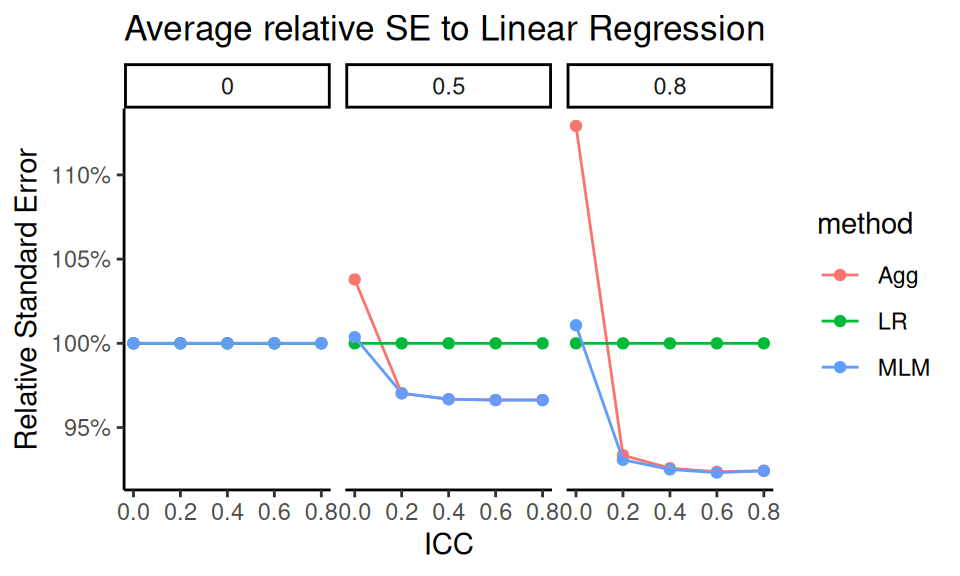

To clarify the main patterns, we then aggregate our SE.scale across the bundled simulation settings—relative performance is on the same scale, so averaging is now a natural thing to do.

We have aggregated out sample sizes, and we go further and remove size_coef since it does not seem to matter much, given the above plot:

s2 <-

ssres %>%

group_by( ICC, alpha, method ) %>%

summarise( SE.scale = mean( SE.scale ) ) %>%

filter( method != "LR" )

ggplot( s2, aes( ICC, SE.scale, col=method ) ) +

facet_wrap( ~ alpha, labeller = label_both ) +

geom_hline( yintercept = 1 ) +

geom_point() + geom_line() +

scale_y_continuous( labels = scales::percent_format() ) +

labs( title = "Average relative SE to Linear Regression",

y = "Relative Standard Error" )

Our aggregated plot of the precision of aggregation and MLM relative to Linear Regression gives a simple story clearly told. The performance of aggregation improves with ICC. MLM also has benefits over LR, and does not pay much cost when ICC is low.

13.5 The Bias-SE-RMSE plot

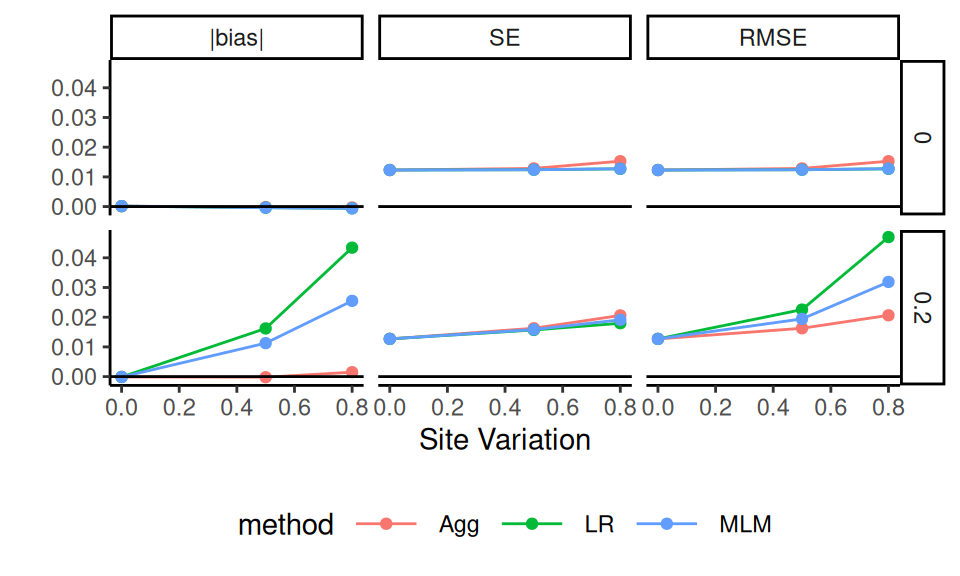

We can visualize bias and standard error together, along with RMSE, to get a rich picture of performance.

To illustrate, we subset to our scenarios where there is real bias for both LR and MLM (i.e., when ICC is 0; see findings under bias from above).

We also subset to our middle values of n_bar = 80 and our large J=80, where uncertainty is small and thus the relative role of bias may be large.

bsr <- sres %>%

filter( n_bar == 80, J==80, ICC == 0 )

bsr <- bsr %>%

dplyr::select( -R, -power, -ESE_hat, -SD_SE_hat ) %>%

pivot_longer( cols=c("bias","SE","RMSE"),

names_to = "measure",

values_to = "value" ) %>%

group_by( measure, size_coef, method, ICC, alpha ) %>%

summarise( value = mean( value ),

n = n() )

bsr$measure = factor( bsr$measure,

levels=c("bias", "SE", "RMSE"),

labels =c("bias", "SE", "RMSE" ) )

ggplot( bsr, aes( as.factor(alpha), value, col=method,

group = method )) +

facet_grid( size_coef ~ measure ) +

geom_line() + geom_point() +

labs( y = "", x = "Site Variation" ) +

geom_hline( yintercept = 0 ) +

theme( legend.position="bottom",

legend.direction="horizontal",

legend.key.width=unit(1,"cm"),

panel.border = element_blank() )

The combination of bias, standard error, and RMSE provides a rich and informative view of estimator performance. The top row represents settings where effect size is independent of cluster size, while the bottom row reflects a correlation between size and effect. We see how bias, SE and RMSE grow as site variation increases (moving rightward in each panel). Notably, when effect size is related to cluster size (bottom row), both linear regression and MLM exhibit significant bias, leading to notable increase in RMSE over SE. In contrast, when effect size is unrelated to cluster size (top row), all methods show minimal bias, and the SEs are about the same; that said, we see aggregation paying a penalty as variation cluster size increases. Overall, we see RMSE is primarily driven by SE.

The Bias-SE-RMSE visualization directly illustrates the canonical relationship:

\[ \text{RMSE}^2 = \text{Bias}^2 + \text{SE}^2 \]

The plot shows overall performance (RMSE) decomposed into into its two fundamental components: systematic error (bias) and variability (standard error). Here we see how bias for LR, for example, is dominant when site variation is high. The differences in SE across methods are small and are thus not the main reason for differences in overall estimator performance; bias is the main driver.

This is the kind of diagnostic plot we often wish were included in more applied simulation studies.

13.6 Assessing the quality of the estimated SEs

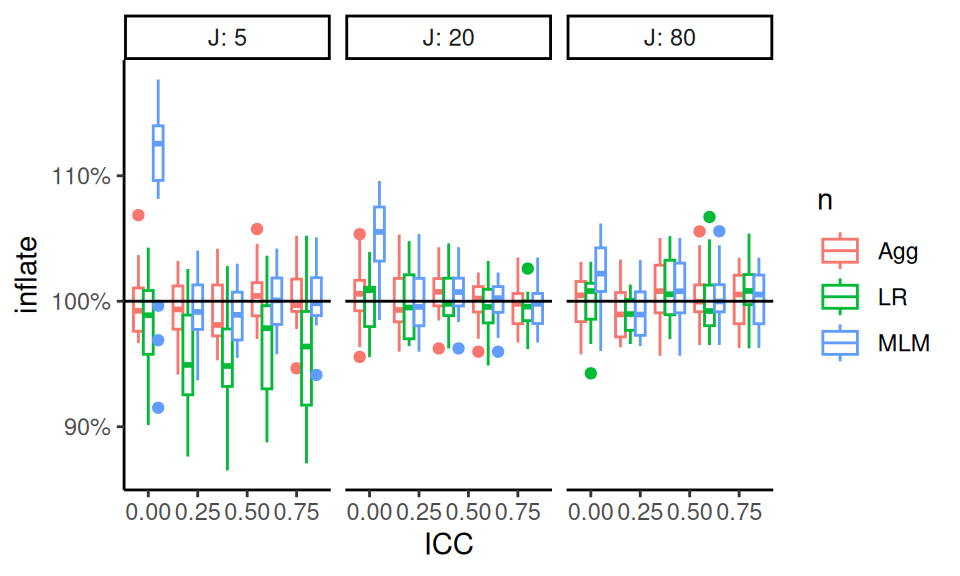

So far we have examined the performance of our point estimators. We next look at ways to assess our estimated standard errors. A good first question is whether they are about the right size, on average, across all the scenarios.

When assessing estimated standard errors it is very important to see if they are reliably the right size, making the bundling method an especially important tool here. We first see if the average estimated SE, relative to the true SE, is usually around 1 across all scenarios:

sres <- sres %>%

mutate( inflate = ESE_hat / SE )

ggplot( sres,

aes( ICC, inflate, col=method,

group = interaction(ICC,method) ) ) +

facet_grid( . ~ J, labeller = label_both) +

geom_boxplot( position="dodge", outlier.size=0.5 ) +

geom_hline( yintercept=1 ) +

labs( color="n", y = "Inflation" ) +

scale_y_continuous( labels = scales::percent_format() )

We see that, for the most part, our estimated SEs are about right, on average, across all scenarios. When the ICC is 0 and J is small, the MLM SEs are clearly too high. We also see that when J is 5, the LR estimator tends to be a bit low.

We next start exploring to dig into why our boxplots are wide.

In particular, we want to see if other factors dictate when the SEs are biased.

We first subset to the \(J = 80\) scenarios to see if those box widths could just be due to the MCSEs.

The simhelpers calc_relative_var() method gives mcses for relative bias of an estimated variance to the true variance.

We thus square our estimated SEs to get variance estimates, and then use that function to see if the relative variance estimates are biased:

se_res <- res %>%

group_by( n_bar, J, ATE, size_coef, ICC, alpha, method ) %>%

summarize( calc_relative_var( estimates = ATE_hat,

var_estimates = SE_hat^2,

criteria = "relative bias" ) )

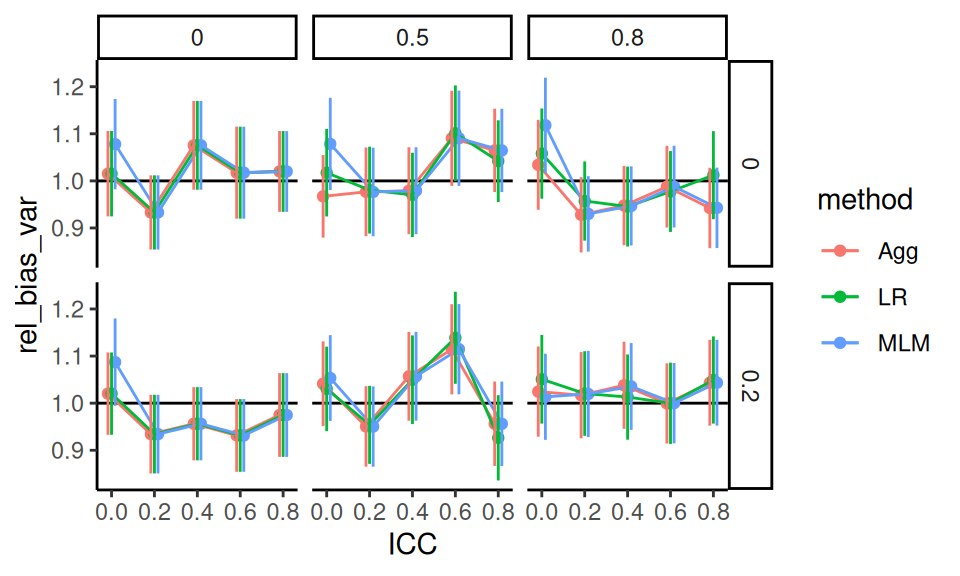

se_res %>%

filter( J == 80, n_bar == 80 ) %>%

ggplot( aes( ICC, rel_bias_var, col=method ) ) +

facet_grid( size_coef ~ alpha ) +

geom_hline( yintercept = 1 ) +

geom_point( position = position_dodge( width=0.05) ) +

geom_line( position = position_dodge( width=0.05) ) +

geom_errorbar( aes( ymin = rel_bias_var - 1.96*rel_bias_var_mcse,

ymax = rel_bias_var + 1.96*rel_bias_var_mcse ),

position = position_dodge( width=0.05 ),

width = 0 )

In looking at this plot, we see no real evidence of miscalibration: our confidence intervals are generally covering 1, meaning our average estimated variance is about the same as the true variance. This makes us think the boxes for \(J=80\) in the prior plot are wide due to MCSE rather than other simulation factors driving some slight miscalibration. We might then assume this applies to the \(J = 20\) case as well.

13.6.1 Stability of estimated SEs

We can also look at how stable the estimated SEs are, relative to the actual uncertainty they are trying to capture.

We do this by calculating the standard deviation of the estimated standard errors and compare that to the standard deviation of the point estimate.

This is related to the coefficient of variation of SE_hat.

sres <- mutate( sres,

SD_SE_hat_rat = SD_SE_hat / SE )

ggplot( sres,

aes( ICC, SD_SE_hat_rat, col=method,

group = interaction(ICC,method) ) ) +

facet_grid( . ~ J, labeller = label_both) +

geom_boxplot( position="dodge" ) +

labs( color="n" ) +

scale_y_continuous( labels = scales::percent_format() ) +

scale_x_continuous( breaks = unique( sres$ICC ) )

Overall, we have a lot of variation in the estimated SEs, relative to the actual uncertainty. We also see that MLM has more reliably estimated SEs than other methods when ICC is small. Aggregation has relatively more trouble estimating uncertainty when J is small. Finally, LR’s SEs are slightly more unstable, relative to the other methods, when \(J\) is larger.

Assessing the stability of standard errors is usually very in the weeds of a performance evaluation. It is a tricky measure: if the true SE is high for a method, then the relative instability will be lower, even if the absolute instability is the same. Thinking through what might be driving what can be difficult, and is often not central to the main purpose of an evaluation. People often look at confidence interval coverage and confidence interval width, instead, to assess the quality of estimated SEs.

13.7 Assessing confidence intervals

Coverage is a blend of how accurate (unbiased) our estimates are and how good our estimated SEs are.

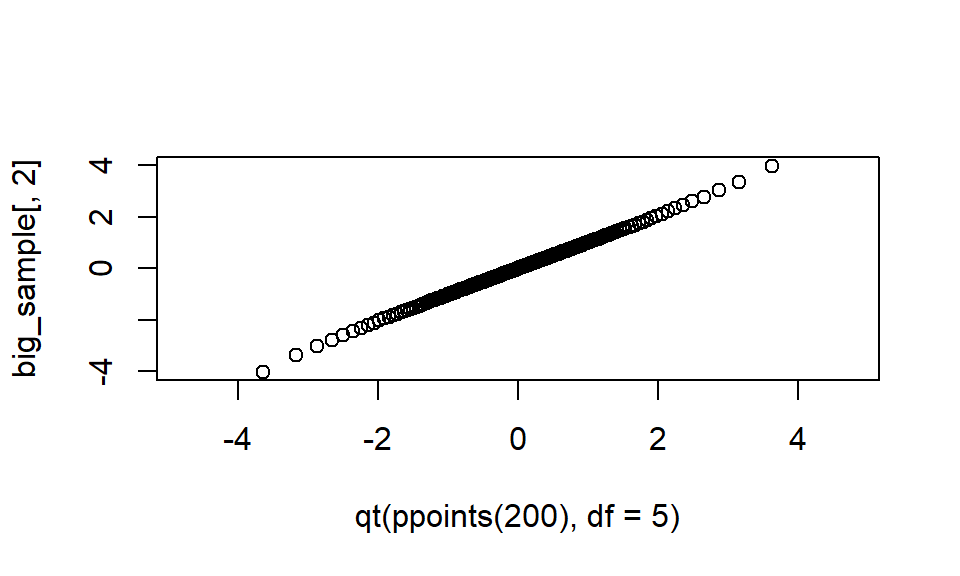

To assess coverage, we first calculate confidence intervals using the estimated effect, estimated standard error, and degrees of freedom.

Once we have our calculated \(t\)-based intervals, we can average them across runs to get average width and coverage using simhelpers’s calc_coverage() method.

A good confidence interval estimator would be one which is generally relatively short while maintaining proper coverage.

Our calculations are as so:

res$df = res$J

res <- mutate( res,

tstar = qt( 0.975, df=df ),

CI_low = ATE_hat - tstar*SE_hat,

CI_high = ATE_hat + tstar*SE_hat,

width = CI_high - CI_low )

covres <- res %>%

group_by( n_bar, J, ICC, alpha, size_coef, method, ATE ) %>%

summarise( calc_coverage( lower_bound = CI_low,

upper_bound = CI_high,

true_param = ATE ) ) %>%

ungroup()We then look at those simulations with a relationship of site size and impact.

We subset to n_bar = 80, size_coef = 0.2 and alpha = 0.5 to simplify for our initial plot:

c_sub <- covres %>%

dplyr::filter( size_coef != 0, n_bar == 80, alpha == 0.5 )

ggplot( c_sub, aes( ICC, coverage, col=method, group=method ) ) +

facet_grid( . ~ J, labeller = label_both ) +

geom_line( position = position_dodge( width=0.05)) +

geom_point( position = position_dodge( width=0.05) ) +

geom_errorbar( aes( ymax = coverage + 2*coverage_mcse,

ymin = coverage - 2*coverage_mcse ), width=0,

position = position_dodge( width=0.05) ) +

geom_hline( yintercept = 0.95 )

Generally coverage is good unless \(J\) is low or ICC is 0. Monte Carlo standard error based confidence intervals on our performance metrics indicate that, in some settings, the observed coverage is reliably different from the nominal 95%, suggesting issues with estimator bias, standard error estimation, or both. We might then want to see if these results are general across the other simulation scenarios (see exercises).

For confidence interval width, we can calculate the average width relative to the width of LR across all scenarios:

covres <- covres %>%

group_by( n_bar, J, ICC, alpha, size_coef ) %>%

mutate( width_rel = width / width[method=="LR"] ) %>%

ungroup()

c_agg <- covres %>%

group_by( ICC, J, alpha, method ) %>%

summarise( coverage = mean( coverage ),

coverage_mcse = sqrt( mean( coverage_mcse^2 ) ) / n(),

width_rel = mean( width_rel ) ) %>%

filter( method != "LR" )

ggplot( c_agg, aes( ICC, width_rel, col=method, group=method ) ) +

facet_grid( J ~ alpha ) +

geom_hline( yintercept = 1 ) +

geom_line() + geom_point() +

labs( y = "Relative CI Width",

title = "Average Relative CI Width to Linear Regression" ) +

scale_y_continuous( labels = scales::percent_format() )

Confidence interval width serves as a proxy for precision. Narrow intervals suggest more precise estimates. We see MLM has wider intervals, relative to LR, when ICC is low. When there is site variation, both Agg and MLM have shorter intervals. This plot essentially echos our standard error findings, as expected. There are mild differences due to differences in how the degrees of freedom are calculated, however.

13.8 Exercises

In these exercises we continue to work with the simulation results from the Cluster RCT example in this chapter.

We provide the saved simulation results in the file simulation_CRT.rds, and load and process them as follows:

library( tidyverse )

library( simhelpers )

res <- readRDS( file = "results/simulation_CRT.rds" )

head( res )## # A tibble: 6 × 13

## n_bar J ATE size_coef ICC alpha reps

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 20 5 0.2 0 0 0 1000

## 2 20 5 0.2 0 0 0 1000

## 3 20 5 0.2 0 0 0 1000

## 4 20 5 0.2 0 0 0 1000

## 5 20 5 0.2 0 0 0 1000

## 6 20 5 0.2 0 0 0 1000

## # ℹ 6 more variables: seed <dbl>, runID <chr>,

## # method <chr>, ATE_hat <dbl>, SE_hat <dbl>,

## # p_value <dbl>sres <- res %>%

group_by( n_bar, J, ATE, size_coef, ICC, alpha, method ) %>%

summarise( calc_absolute( estimates = ATE_hat,

true_param = ATE,

criteria = c("bias","stddev",

"rmse")),

calc_relative_var( estimates = ATE_hat,

var_estimates = SE_hat^2,

criteria = "relative bias" ),

power = mean( p_value <= 0.05 ),

ESE_hat = sqrt( mean( SE_hat^2 ) ),

SD_SE_hat = sqrt( sd( SE_hat^2 ) ),

.groups = "drop"

) %>%

rename( R = K_absolute,

RMSE = rmse,

RMSE_mcse = rmse_mcse,

SE = stddev,

SE_mcse = stddev_mcse ) %>%

dplyr::select( -K_relvar ) %>%

ungroup()

names( sres )## [1] "n_bar" "J"

## [3] "ATE" "size_coef"

## [5] "ICC" "alpha"

## [7] "method" "R"

## [9] "bias" "bias_mcse"

## [11] "SE" "SE_mcse"

## [13] "RMSE" "RMSE_mcse"

## [15] "rel_bias_var" "rel_bias_var_mcse"

## [17] "power" "ESE_hat"

## [19] "SD_SE_hat"13.8.1 Assessing uncertainty

Select a plot from this chapter that does not have monte carlo standard errors. Add monte carlo standard errors to that selected plot.

13.8.2 Assessing power

Make a plot showing how power changes as \(J\) changes. As an extra step, add MCSEs to the plot to assess whether you have a reasonable numbers of replications in the simulation.

13.8.3 Going deeper with coverage

For our cluster RCT, we saw coverage is low in some circumstances. Explore the full set of simulation results, possibly adding your own analyses, to ascertain why coverage is low. Is it due to estimator bias? Unreliably estimated standard errors? Something else? Make a final plot and draft a short write-up that captures how coverage is vulnerable for the three estimators.

13.8.4 Pearson correlations with a bivariate Poisson distribution

In Section 10.3, we generated results for a multifactor simulation of confidence intervals for Pearson’s correlation coefficient under a bivariate Poisson data-generating process. Create a plot that depicts the coverage rate of the confidence intervals for \(\rho\) across all four simulation factors. Write a brief explanation of how the plot is laid out and explain why you chose to construct it as you did.